ChatGPT gets an upgrade with some new super capabilities

On September 25, 2023, OpenAI announced two new features for ChatGPT, its most popular AI tool, expanding the ways people can interact with its latest and most advanced model, GPT-4: the ability to ask questions about images and the ability to converse by voice.

GPT-4V is the name of the new model, with the “V” standing for Vision.

The new features make GPT-4 a multimodal model, meaning that the model can receive input in multiple modalities (types of input) rather than text alone.

Until now, ChatGPT could only receive text from you. Now it can also receive images (visual) and audio (sound), and return results based on these inputs.

It is worth noting that multimodality is not unique to ChatGPT: its competitors, Microsoft’s Bing Chat and Google’s Bard, also accept images as input (but not voice, as of this writing).

What does the new model do?

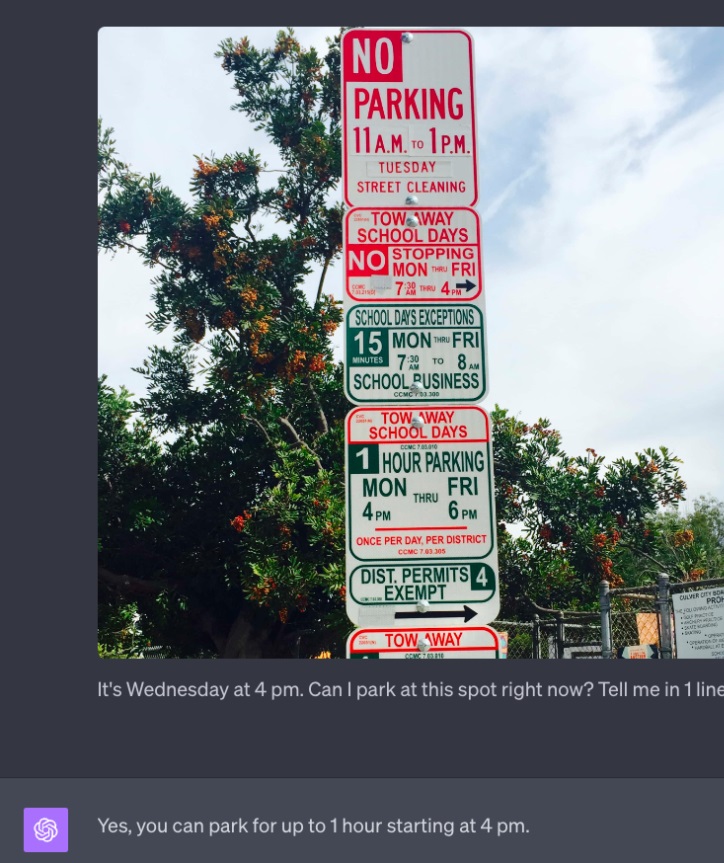

1. Image analysis

For example, if you want to identify a specific plant, you can upload a photo of it to the chat and ask what plant it is, along with other details about it.

You can upload a funny meme and ask the chat to explain the joke.

You can also upload a picture of a handwritten math exercise, and the chat will analyze it and give you a detailed answer (assuming the handwriting is clear enough and, of course, in English).

Image analysis is more than just a nice gimmick: it can serve a variety of needs, from education and research to intelligence work.

So, for example, if you want to know where a certain photo was taken, you can upload it to the chat and ask for the location (assuming the landscape in the photo is well known and recognizable enough).

2. Use of voice

From now on, the chat supports voice in both directions.

This way, you can record yourself talking to the chat instead of typing to it. This can be very convenient for those who work with the chat on a daily basis and use long prompts. Just record!

The chat also gains another new voice capability: answering you aloud.

If you prefer voice for any reason, the chat can give you spoken answers instead of text. You can also choose the voice it answers in from five voices built into the chat.

This can be useful, for example, if you want to share a recording of certain content on WhatsApp, if you’re traveling or wearing headphones on a train, or if you’re just a more auditory person who prefers to work with audio instead of text.

But probably the most useful feature isn’t necessarily talking to or hearing the chat – it’s the transcription feature.

With the new capabilities, you can upload an audio file or recording to the chat and ask it to transcribe what it “hears”.

This feature is very useful for transcribing lectures, YouTube videos, and WhatsApp voice messages (has a solution finally been found for the serial voice-message senders on WhatsApp?).

Missed a class? Ask the lecturer for a recording of the lesson, upload it to the chat, and ask it to transcribe the recording and summarize the main points.

The enormous time-saving potential is definitely starting to reveal itself.

So how do I use the new features?

To access the new features, you’ll need a ChatGPT Plus subscription, which costs $20 a month.

Honestly – one of the easiest investments we have ever made.

But for those of you who haven’t subscribed to ChatGPT Plus yet, there are other options too:

Bing Chat, Microsoft’s free chatbot, is itself based on GPT-4, uses OpenAI’s latest image generator, DALL-E 3, and also accepts images as input (but not audio).

So you can try some of GPT-4V’s new features completely free of charge through Bing, and you’ll also get a chat with internet access, which ChatGPT doesn’t have.

Horizon is Israel’s leading provider of artificial intelligence training.

Just as you can take 3-hour lectures and ask the chat to summarize their key points, there are many other areas where artificial intelligence can “shave off” the many hours we burn on repetitive processes.

Our AI Master course for the general public teaches exactly how to use applications such as ChatGPT to become 4-5x more productive in personal life and work.

Let us know how impressed you were with the new features!

Ido Deutsch

founder